Launch week 2025

Custom Guidelines - AI enforced

Your rules, enforced by AI. Just describe them in plain language.

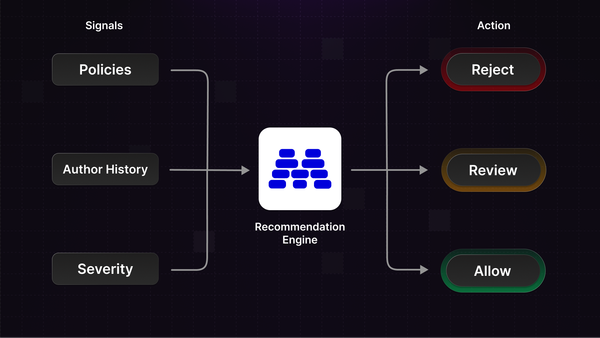

Stop treating every flag the same. Action Recommendations combine trust levels, severity scores, and policy signals to tell you when to allow, review, or reject content.
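As a rough illustration of how trust levels, severity scores, and policy signals might combine into one recommendation, here is a minimal sketch. It is not Moderation API's actual logic. The `Signals` type, the `recommend_action` function, the 0-4 trust scale, and every threshold are hypothetical and exist only for this example.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    # All fields are hypothetical stand-ins for the signals named above.
    trust_level: int      # assumed scale: 0 (untrusted) to 4 (highly trusted)
    severity: float       # assumed score in the range 0.0 to 1.0
    policy_flagged: bool  # True if any policy matched the content


def recommend_action(s: Signals) -> str:
    """Return "allow", "review", or "reject" for one piece of content."""
    if not s.policy_flagged:
        # Nothing matched a policy, so there is nothing to act on.
        return "allow"
    # Assumed rule: trusted authors get more headroom before escalation.
    review_at = 0.3 + 0.05 * s.trust_level
    reject_at = 0.7 + 0.05 * s.trust_level
    if s.severity >= reject_at:
        return "reject"
    if s.severity >= review_at:
        return "review"
    return "allow"


if __name__ == "__main__":
    # The same severity leads to different actions at different trust levels.
    print(recommend_action(Signals(trust_level=0, severity=0.8, policy_flagged=True)))
    print(recommend_action(Signals(trust_level=4, severity=0.8, policy_flagged=True)))
```

The point of the sketch is that one flag does not map to one action: in this toy rule, identical content severity is rejected for an untrusted author but only queued for review for a highly trusted one.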

Launch week 2025

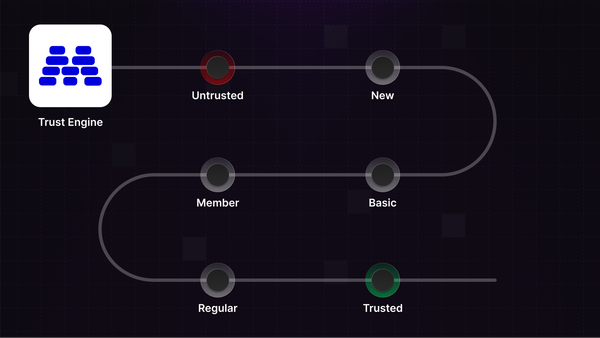

Moderation isn't only about finding bad actors. It's about recognizing who's trustworthy. Introducing Trust Levels.

Launch week 2025

Automatically detect and act on content about sensitive topics like religion, politics, and SHAFT categories.

Launch week 2025

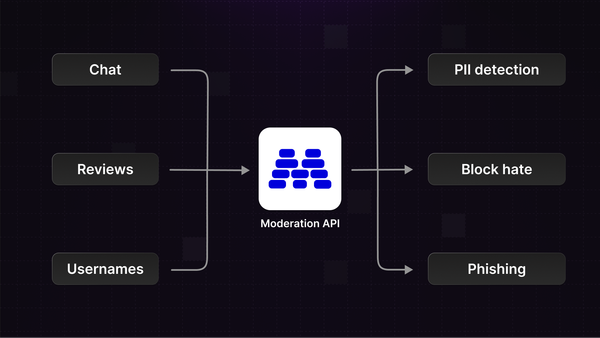

Scale moderation across chat, reviews, and profiles with content channels. Set distinct policies, separate review queues, and improve accuracy by declaring content types.

Updates

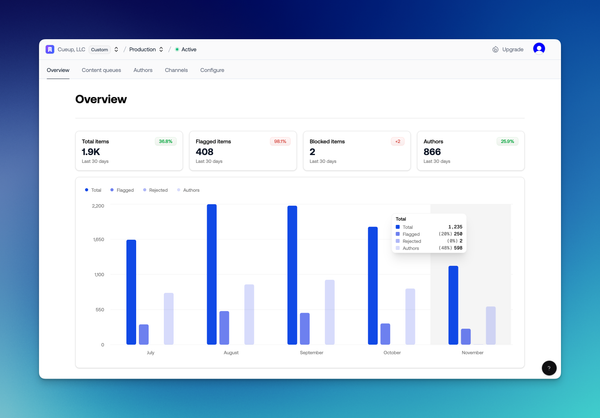

We've shipped two huge upgrades to make Moderation API faster to set up, richer in signal, and easier to maintain, and next week we're launching something new every day (Dec 8-12). Revamped dashboard: Next time you sign in, the dashboard will feel very different. We rebuilt

Tutorial

Users often come across inappropriate content, and it's crucial for social platforms to handle this scenario effectively. Allowing your users to report content builds trust, maintains a safe online environment, and ultimately improves the bottom line. But as your user base grows, managing these reports can become challenging.

We just rolled out a suite of new API endpoints designed to improve the experience with our Wordlists and Review Queues for enterprise plans. These enhancements offer greater flexibility if you're aiming to customise your moderation interface and leverage our robust moderation and review queue engine. There

New model

The new image toxicity model adds a single but robust label for detecting and preventing harmful images. While the image NSFW model can distinguish between multiple types of unwanted content, it can fail to generalise to toxic content outside of the provided labels. The toxicity model, on the other hand

Until now, Moderation API allowed for the moderation of individual pieces of text or images. In practice, there’s often a need to moderate entire entities composed of multiple content fields. While one solution has been to call the API separately for each field, this approach can be inefficient and

You can now add smart wordlists that understand semantic meaning, similar words, and obfuscations in your Moderation API projects. When to use a wordlist: In many cases AI agents are a better solution for enforcing certain guidelines, as they understand context and intent, but wordlists are useful if you

Updates

Llama Guard 3, now on Moderation API, offers precise content moderation with Llama-3.1. It’s faster and more accurate than GPT-4, perfect for real-time use and customizable for nuanced moderation needs.