Object moderation endpoint

Until now, the Moderation API only supported moderating individual pieces of text or images. In practice, you often need to moderate entire entities made up of multiple content fields. One workaround has been to call the API separately for each field, but this is inefficient and can miss context that only becomes apparent when the fields are considered together.

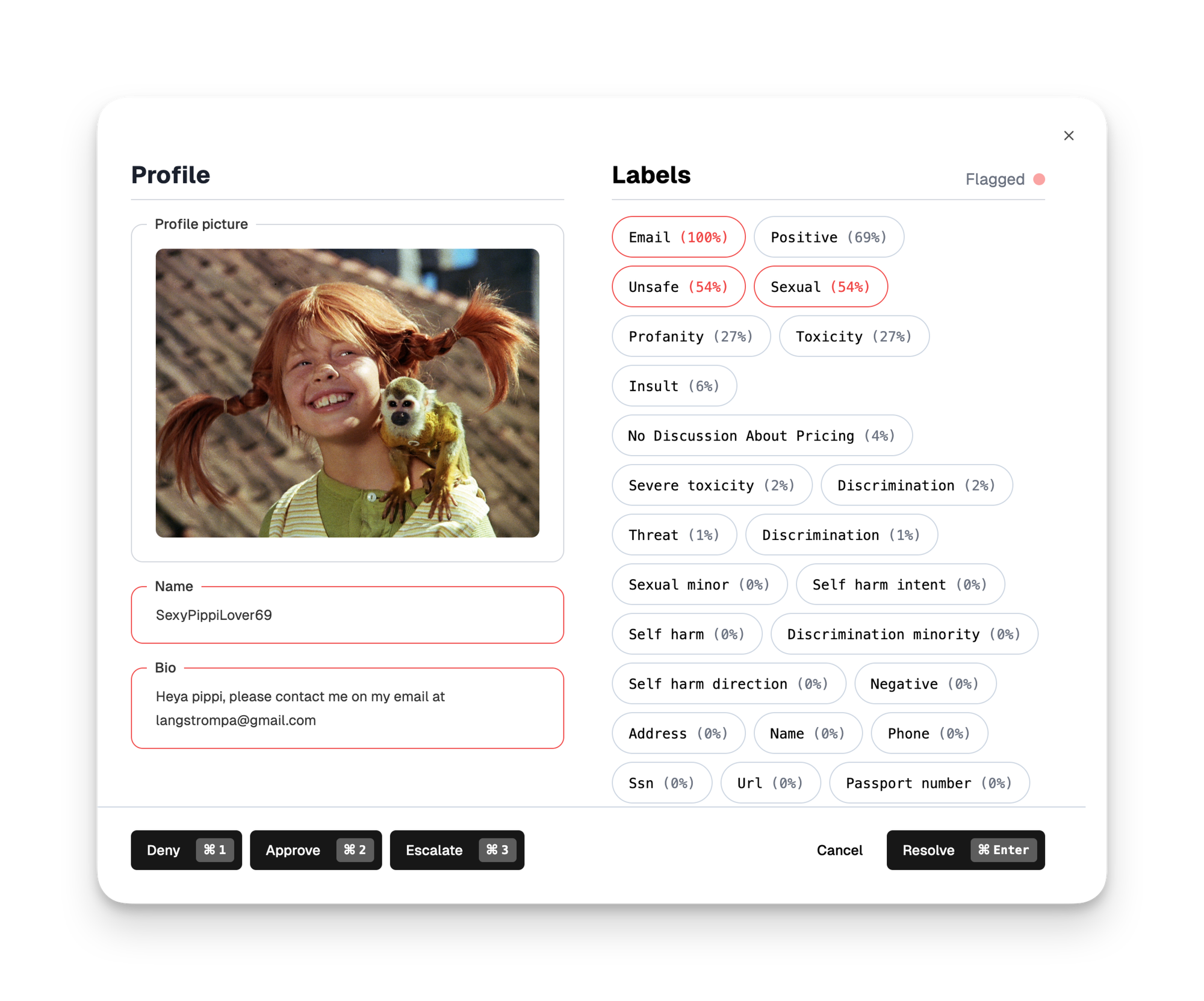

Today, we are excited to introduce the ability to moderate a complete object in a single call, which improves efficiency, performance, and accuracy. The new feature supports four object types: profile, event, product, and object. If your content does not fit one of the specific types, use the generic object type.
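To make the idea of "one object, many fields" concrete, here is a minimal sketch of how an entity might be bundled into a single payload, falling back to the generic `object` type when it is not a profile, event, or product. The `ModerationObject` and `ContentField` shapes, the field names (`id`, `type`, `value`), and the `buildObject` helper are hypothetical illustrations, not the documented request schema or an SDK function.

```typescript
// Hypothetical shapes: these names are illustrative assumptions,
// not the documented request schema.
type ObjectType = "profile" | "event" | "product" | "object";

type ContentField =
  | { id: string; type: "text"; value: string }
  | { id: string; type: "image"; value: string }; // value holds an image URL

interface ModerationObject {
  type: ObjectType;
  fields: ContentField[];
}

// The specific types; anything else falls back to the generic "object".
const KNOWN_TYPES: readonly ObjectType[] = ["profile", "event", "product"];

// Hypothetical helper: bundle several fields into one object payload.
function buildObject(kind: string, fields: ContentField[]): ModerationObject {
  const type = (KNOWN_TYPES as readonly string[]).includes(kind)
    ? (kind as ObjectType)
    : "object";
  return { type, fields };
}

const listing = buildObject("product", [
  { id: "title", type: "text", value: "Vintage camera" },
  { id: "description", type: "text", value: "Works perfectly, minor scratches." },
  { id: "photo", type: "image", value: "https://example.com/camera.jpg" },
]);

// "review" is not one of the specific types, so it becomes "object".
const review = buildObject("review", [
  { id: "body", type: "text", value: "Great seller!" },
]);

console.log(listing.type, listing.fields.length); // product 3
console.log(review.type); // object
```

Sending all fields together, rather than one request per field, is what lets context-aware models see the title, description, and photo side by side.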

Some models use this additional context to moderate objects more precisely. For example, the criteria for reviewing an image attached to a personal profile can differ significantly from those for a product image, and these models can now take that distinction into account.

Review Queue Integration

Review queues now display each object as a whole. You get a unified view of the object and can quickly see which specific fields triggered a flag, so you can resolve issues faster and with more confidence.
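As a rough illustration of the per-field view described above, the sketch below takes a list of per-field results and picks out the fields that triggered a flag. The `FieldResult` shape and both helper functions are hypothetical; the actual response format may differ. It also assumes, for illustration only, that an object counts as flagged when any one of its fields is flagged.

```typescript
// Hypothetical per-field result shape; the real response may differ.
interface FieldResult {
  id: string;
  flagged: boolean;
  labels: string[];
}

// Collect the ids of fields that triggered a flag, so a reviewer
// can go straight to them instead of scanning the whole object.
function flaggedFields(results: FieldResult[]): string[] {
  return results.filter((r) => r.flagged).map((r) => r.id);
}

// Assumption: the object is flagged if any of its fields is flagged.
function isObjectFlagged(results: FieldResult[]): boolean {
  return results.some((r) => r.flagged);
}

const results: FieldResult[] = [
  { id: "title", flagged: false, labels: [] },
  { id: "description", flagged: true, labels: ["spam"] },
  { id: "photo", flagged: false, labels: [] },
];

console.log(isObjectFlagged(results)); // true
console.log(flaggedFields(results).join(", ")); // description
```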

TypeScript SDK and OpenAPI

The new endpoint is also available in our TypeScript SDK and OpenAPI specification, making it easier to integrate.

Please find further documentation for the object moderation endpoint here: