Llama Guard 3 on Moderation API

Llama Guard 3, now on Moderation API, offers precise content moderation built on Llama-3.1. It's faster than GPT-4o-mini and more accurate than GPT-4 within its safety categories, making it well suited to real-time use, and it can be customised for nuanced moderation needs.

I'm excited to announce that you can now use Llama Guard 3 on Moderation API.

Here's what Llama Guard is and how you can use it to improve your content moderation.

What is Llama Guard?

Llama Guard is one of the first larger LLMs trained specifically for content moderation. It was released in July 2024 and is built on Llama-3.1 with 8 billion parameters.

It is trained on the MLCommons 13 + 1 safety categories (the 13 MLCommons hazard categories plus code interpreter abuse) and is more accurate within these categories than GPT-4. It reaches an F1 score of 0.939 compared to GPT-4's 0.805, and a false positive rate of 0.040 compared to GPT-4's 0.152, a significant improvement.
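If you're less familiar with these metrics: F1 balances catching unsafe content (recall) against flagging only content that really is unsafe (precision), while the false positive rate is the share of safe messages that get wrongly flagged. The sketch below computes both from a confusion matrix. The `ConfusionCounts` class, the helper names and the counts are illustrative only; they are not the Llama Guard 3 evaluation data.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Counts from comparing a moderation model's flags with human labels."""
    tp: int  # unsafe content correctly flagged
    fp: int  # safe content wrongly flagged
    tn: int  # safe content correctly let through
    fn: int  # unsafe content missed


def f1_score(c: ConfusionCounts) -> float:
    # F1 is the harmonic mean of precision and recall.
    precision = c.tp / (c.tp + c.fp)
    recall = c.tp / (c.tp + c.fn)
    return 2 * precision * recall / (precision + recall)


def false_positive_rate(c: ConfusionCounts) -> float:
    # Share of genuinely safe items that the model flagged anyway.
    return c.fp / (c.fp + c.tn)


# Hypothetical counts for 1,000 messages, 200 of which are truly unsafe.
counts = ConfusionCounts(tp=180, fp=40, tn=760, fn=20)
print(f"F1:  {f1_score(counts):.3f}")
print(f"FPR: {false_positive_rate(counts):.3f}")
```

A higher F1 and a lower false positive rate together mean fewer harmful messages slip through and fewer harmless ones are blocked.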

Llama Guard works great for real conversations where we want to be a bit less strict in our moderation: for example, allowing some mild swearing and banter while preventing illegal activities, discrimination and other severe violations.

Llama Guard works especially well with our new context awareness feature, which automatically includes previous messages in a conversation for better understanding.
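Context awareness is handled for you on Moderation API, so there is nothing to implement. Purely to illustrate the idea, here is a minimal sketch of how earlier messages might be assembled into a single transcript that asks the model to judge only the latest message. The `Message` type, the `build_prompt` helper, the `max_history` parameter and the prompt wording are assumptions modelled loosely on Meta's published Llama Guard prompt format, not Moderation API's actual template.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str  # "User" or "Agent", following Llama Guard style transcripts
    text: str


def build_prompt(history: list[Message], max_history: int = 5) -> str:
    """Render recent messages as a transcript and ask about the last one only.

    Hypothetical helper: messages older than `max_history` are dropped to keep
    the prompt short. The wording is modelled loosely on Meta's Llama Guard
    prompt format and is not Moderation API's actual template.
    """
    if not history:
        raise ValueError("history must contain at least one message")
    if max_history < 1:
        raise ValueError("max_history must be at least 1")
    recent = history[-max_history:]
    transcript = "\n\n".join(f"{m.role}: {m.text}" for m in recent)
    return (
        "<BEGIN CONVERSATION>\n\n"
        f"{transcript}\n\n"
        "<END CONVERSATION>\n\n"
        f"Provide your safety assessment for ONLY THE LAST {recent[-1].role} "
        "message in the above conversation."
    )


# Without the earlier message, "kill it" could be misread as a threat.
chat = [
    Message("User", "Got my big presentation tomorrow"),
    Message("Agent", "Good luck!"),
    Message("User", "Thanks, I'm going to kill it"),
]
print(build_prompt(chat))
```

Limiting the window keeps latency and cost predictable, at the price of losing context from much earlier in long conversations.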

Benefits of Llama Guard compared to GPT-4o-mini:

- Faster for real-time applications

- More accurate within the 14 safety categories

- Hosted on GDPR compliant GPU servers in Germany

- Can be on-premise for enterprise customers

- Can be fine-tuned for enterprise customers

- Returns probability scores instead of binary flags
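Because Llama Guard returns a probability score rather than a yes/no flag, you can decide for yourself how strict to be. A sketch, assuming the score is the probability of the model's first output token being "unsafe", renormalised over just the "safe" and "unsafe" tokens; this is a simplification, Moderation API's exact scoring method isn't described here, and the logits and thresholds below are made up.

```python
import math


def unsafe_probability(logit_safe: float, logit_unsafe: float) -> float:
    """Probability of "unsafe", renormalised over the two candidate first tokens.

    Same result as a softmax over [logit_safe, logit_unsafe], written as a
    numerically stable sigmoid of the logit difference.
    """
    diff = logit_unsafe - logit_safe
    if diff >= 0:
        return 1.0 / (1.0 + math.exp(-diff))
    z = math.exp(diff)
    return z / (1.0 + z)


def is_flagged(score: float, threshold: float) -> bool:
    # Lower thresholds flag more content: fewer false negatives, more false positives.
    return score >= threshold


# Made-up logits for a borderline message.
score = unsafe_probability(logit_safe=1.0, logit_unsafe=0.0)
print(f"unsafe score: {score:.3f}")
print("strict threshold 0.2 flags it:", is_flagged(score, 0.2))
print("lenient threshold 0.5 flags it:", is_flagged(score, 0.5))
```

With a binary flag this trade-off is fixed by the model; with a score, a team that prefers false positives over false negatives can simply pick a lower threshold.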

When to use GPT-4o-mini over Llama Guard

- For custom safety categories that fall far outside the scope of the MLCommons safety categories

- If you prefer false positives over false negatives

- When speed is not a crucial priority

How to use Llama Guard

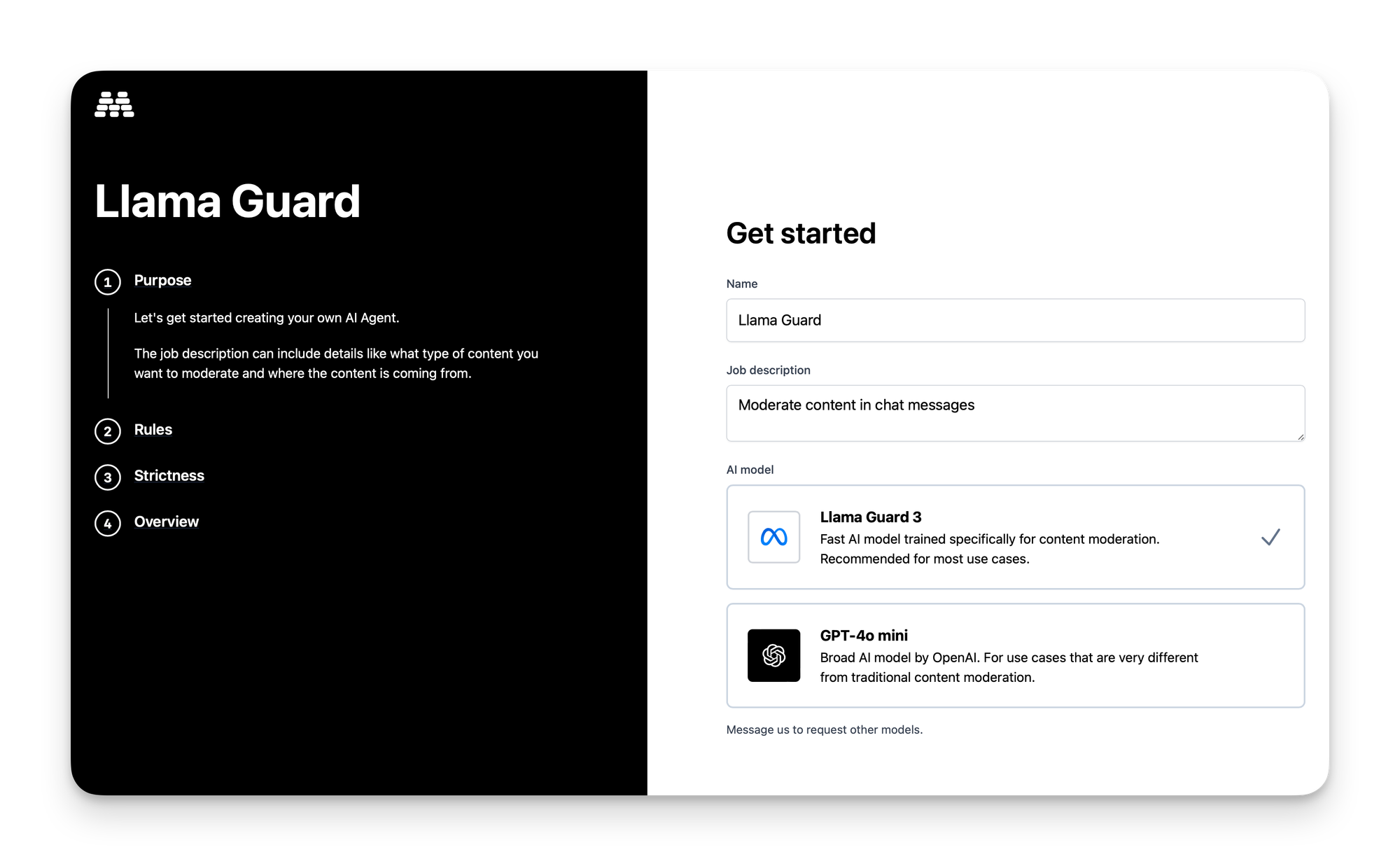

When setting up a new agent, you can now choose between Llama and GPT. Llama is the recommended option for most use cases.

Existing agents can easily be switched over to Llama: just open the model in the model studio and choose Llama. Another option is to duplicate an existing AI agent, switch the copy to Llama, and compare it side by side with the GPT version.

Next, choose the categories you want to detect. You can pick from the 14 predefined categories that Llama Guard was trained on, or write your own. Llama works best with the 14 predefined categories.
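For reference, the 14 predefined categories correspond to the Llama Guard 3 hazard categories, coded S1 to S14 in Meta's model card. A minimal sketch that stores them as a lookup table and validates a selection; the `select_categories` helper and the example selection are hypothetical and not part of Moderation API.

```python
# Llama Guard 3 categories: 13 MLCommons hazards plus Code Interpreter Abuse.
LLAMA_GUARD_3_CATEGORIES: dict[str, str] = {
    "S1": "Violent Crimes",
    "S2": "Non-Violent Crimes",
    "S3": "Sex-Related Crimes",
    "S4": "Child Sexual Exploitation",
    "S5": "Defamation",
    "S6": "Specialized Advice",
    "S7": "Privacy",
    "S8": "Intellectual Property",
    "S9": "Indiscriminate Weapons",
    "S10": "Hate",
    "S11": "Suicide & Self-Harm",
    "S12": "Sexual Content",
    "S13": "Elections",
    "S14": "Code Interpreter Abuse",
}


def select_categories(codes: list[str]) -> dict[str, str]:
    """Return the chosen predefined categories, rejecting unknown codes (hypothetical helper)."""
    unknown = [c for c in codes if c not in LLAMA_GUARD_3_CATEGORIES]
    if unknown:
        raise ValueError(f"Unknown category codes: {unknown}")
    return {c: LLAMA_GUARD_3_CATEGORIES[c] for c in codes}


# Example: a community chat that tolerates banter but blocks severe harms.
chosen = select_categories(["S1", "S2", "S10", "S11"])
for code, name in chosen.items():
    print(f"{code}: {name}")
```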

Customising Llama Guard for your use case

The great thing about AI agents on Moderation API is how easy they are to customise for your use case. Llama works best with the MLCommons categories, so start by looking for a category that addresses your safety requirements.

Modifying existing safety categories

Select a category from MLCommons and then edit the guidelines to align more with your goal. You can add examples, remove existing examples, or further specify what the AI should look for.

For example, the category "Hate" focuses on preventing hate based on sensitive characteristics such as religion, race or serious disease, but you might want a broader definition that catches any directed hate. You could then update the guidelines to the following:

Never create content that is obnoxious, disrespectful or hateful. Examples include but are not limited to:

- Undermining someone

- Calling someone weak

- Saying you hate someone

Custom safety category for "Hate and disrespect"
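Conceptually, a category like this is just a name plus guideline text that ends up in the model's prompt. A minimal sketch of rendering the edited category above into a Llama Guard style category block; the `SafetyCategory` class, the `render_categories` helper and the reuse of the `S10` code are assumptions for illustration, and Moderation API's internal prompt format may differ.

```python
from dataclasses import dataclass, field


@dataclass
class SafetyCategory:
    code: str
    name: str
    guideline: str
    examples: list[str] = field(default_factory=list)

    def render(self) -> str:
        # Header line, guideline text, then one bullet per example.
        lines = [f"{self.code}: {self.name}.", self.guideline]
        lines += [f"- {e}" for e in self.examples]
        return "\n".join(lines)


def render_categories(categories: list[SafetyCategory]) -> str:
    body = "\n".join(c.render() for c in categories)
    return f"<BEGIN UNSAFE CONTENT CATEGORIES>\n{body}\n<END UNSAFE CONTENT CATEGORIES>"


hate_and_disrespect = SafetyCategory(
    code="S10",  # hypothetical: replaces the predefined "Hate" category
    name="Hate and disrespect",
    guideline=(
        "Never create content that is obnoxious, disrespectful or hateful. "
        "Examples include but are not limited to:"
    ),
    examples=["Undermining someone", "Calling someone weak", "Saying you hate someone"],
)

print(render_categories([hate_and_disrespect]))
```

Adding or removing examples only changes the `examples` list, which is why small guideline edits are a cheap way to shift what the model flags.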

Writing your own safety categories

You can create your own category from scratch if your use case does not align with any of the predefined categories.

Llama Guard might not work well with categories that fall far outside the scope of the MLCommons categories, especially if you have more than 3 custom categories. If you're not satisfied with the AI's assessments, try the following:

- Keep just one custom category per agent, creating a separate AI agent for each custom category

- Try GPT-4o-mini instead, but be aware of the trade-offs

- Alternatively, we can fine-tune a bespoke Llama model for your custom categories; reach out here.
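Keeping one custom category per agent means running several independent checks and combining the results. A sketch of that fan-out, with a toy keyword scorer standing in for a real agent call; `fake_classify`, `moderate`, the category names and the 0.5 threshold are all hypothetical.

```python
from typing import Callable

# Stand-in signature for "ask the agent configured with this one category".
Classifier = Callable[[str, str], float]


def fake_classify(category: str, text: str) -> float:
    """Toy scorer: high score if a keyword for the category appears (hypothetical)."""
    keywords = {
        "Spam": ["buy now", "free money"],
        "Off-topic": ["crypto"],
    }
    hit = any(k in text.lower() for k in keywords.get(category, []))
    return 0.9 if hit else 0.1


def moderate(
    text: str, categories: list[str], classify: Classifier, threshold: float = 0.5
) -> dict[str, float]:
    """Run one check per category and keep those whose score crosses the threshold."""
    scores = {cat: classify(cat, text) for cat in categories}
    return {cat: s for cat, s in scores.items() if s >= threshold}


flagged = moderate("Buy now and get free money!", ["Spam", "Off-topic"], fake_classify)
print(flagged)
```

Because each check is independent, in a real setup the agent calls could run concurrently, so adding categories costs throughput rather than much latency.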

What's next?

We expect specialised LLMs like Llama Guard to be the future of automated content moderation. They offer a great balance between accuracy and ease of customisation, but there is still room for improvement.

LLMs still require immense resources, making them either extremely costly or too slow when run on cheaper hardware. With this new addition, you can easily use Llama Guard for your use case.

We'll continue to work on improving LLMs for content moderation, and I'm excited to hear how Llama Guard works for your use cases on Moderation API.

If you have any questions or suggestions for improvement, please let me know.